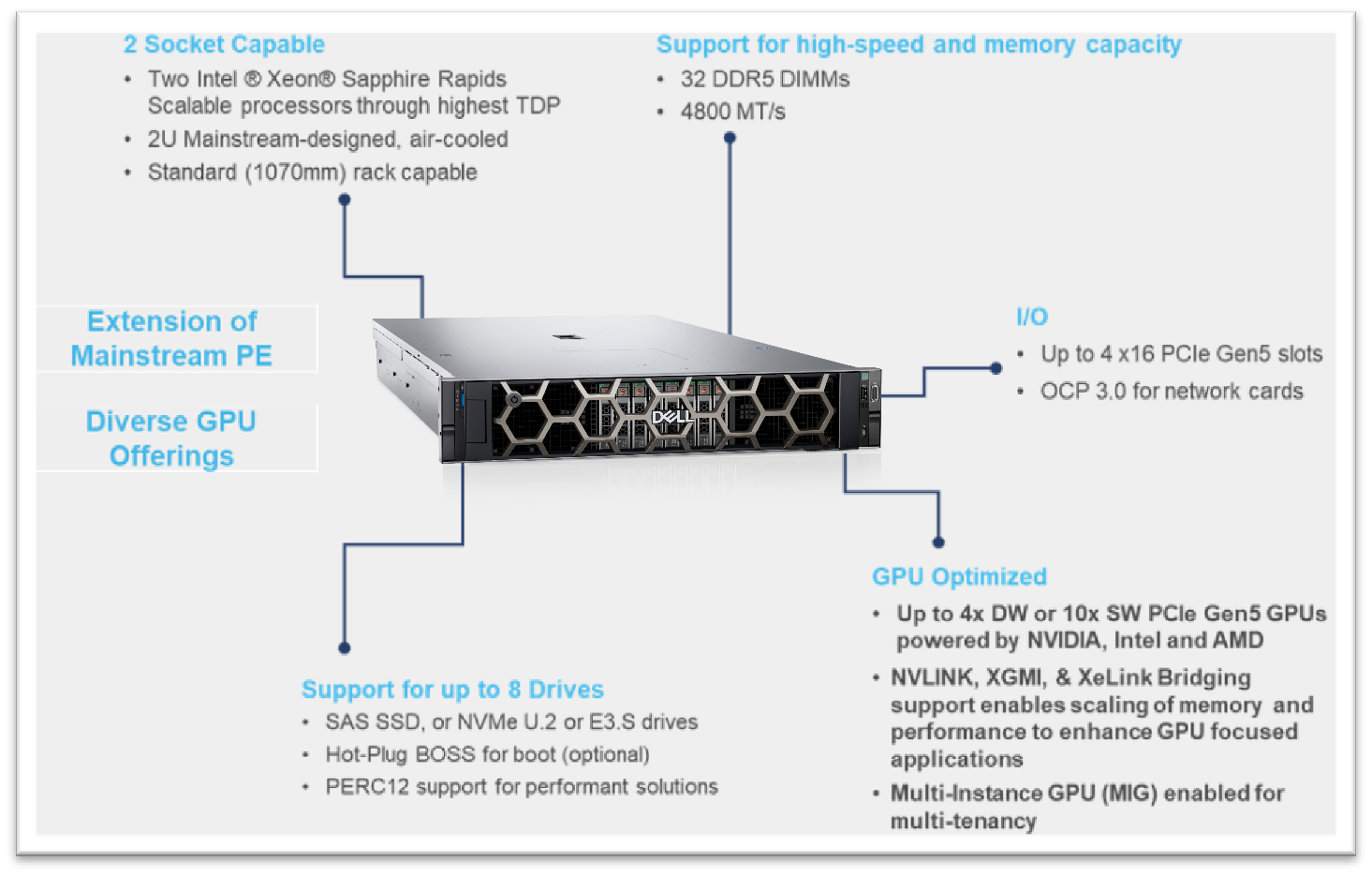

Llama 2: Efficient Fine-Tuning Using Low-Rank Adaptation (LoRA) on a Single GPU | Dell Technologies Info Hub

Fine-tuning requirements also vary with the amount of data, the time available to complete fine-tuning, and cost constraints. Llama 2 is known for its ability to handle tasks, generate text, and adapt to different requirements. It is … Single-GPU setup: on machines equipped with multiple GPUs, … In this blog, we compare full-parameter fine-tuning with LoRA and answer questions around the strengths … Use the latest NeMo Framework Training container. This playbook has been tested using the … Fine-tuning is a subset, or specific form, of transfer learning. In fine-tuning, the weights of the entire model … Here we focus on fine-tuning the 7-billion-parameter variant of Llama 2 (the variants are 7B, 13B, 70B, and the …). Fine-tune Llama 2 (7B to 70B) on Amazon SageMaker: a complete guide from setup to QLoRA fine-tuning and deployment.
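To make the LoRA-versus-full-fine-tuning comparison concrete, here is a back-of-the-envelope sketch for the 7B model. The architecture numbers (hidden size 4096, 32 transformer blocks), the choice to adapt only the query and value projections at rank 8, and the 16-bytes-per-parameter rule of thumb for Adam in mixed precision are our assumptions, not figures taken from the snippets. LoRA freezes each dense weight W (d_out × d_in) and trains a low-rank update BA, where B is d_out × r and A is r × d_in, so each adapter adds only r(d_out + d_in) parameters.

```python
"""Rough comparison of LoRA vs. full fine-tuning for a Llama-2-7B-sized model.

Assumptions (not from the source): hidden size 4096, 32 layers, LoRA applied
only to the query and value projections, rank r = 8, and a rule of thumb of
16 bytes per trainable parameter for Adam in mixed precision.
"""


def lora_params(d_out: int, d_in: int, r: int) -> int:
    """Trainable parameters for one LoRA adapter: B is d_out x r, A is r x d_in."""
    return r * (d_out + d_in)


def full_params(d_out: int, d_in: int) -> int:
    """Parameters in the frozen dense weight W (d_out x d_in)."""
    return d_out * d_in


HIDDEN = 4096          # assumed hidden size of the 7B model
LAYERS = 32            # assumed number of transformer blocks
RANK = 8               # assumed LoRA rank
TARGETS_PER_LAYER = 2  # assumed: query and value projections only
TOTAL_PARAMS = 7e9     # nominal parameter count

per_matrix_full = full_params(HIDDEN, HIDDEN)
per_matrix_lora = lora_params(HIDDEN, HIDDEN, RANK)
trainable_lora = per_matrix_lora * TARGETS_PER_LAYER * LAYERS

# Full fine-tuning rule of thumb: 2 B fp16 weights + 2 B fp16 gradients
# + 12 B fp32 master weights and the two Adam moment buffers, per parameter.
full_ft_gb = TOTAL_PARAMS * 16 / 1e9

# LoRA: frozen fp16 base weights (2 B/param), plus the same 16 B/param
# rule of thumb applied only to the adapter parameters.
lora_ft_gb = (TOTAL_PARAMS * 2 + trainable_lora * 16) / 1e9

print(f"one 4096x4096 matrix: {per_matrix_full:,} full vs {per_matrix_lora:,} LoRA")
print(f"trainable LoRA params: {trainable_lora:,}")
print(f"full FT ~{full_ft_gb:.0f} GB vs LoRA ~{lora_ft_gb:.1f} GB (weights and optimizer only)")
```

Activations, the KV cache, and framework overhead are deliberately left out, so real peak usage is higher than either number. The point is the ratio: roughly 112 GB versus 14 GB under these assumptions, which is why LoRA-style methods make single-GPU fine-tuning of the 7B model plausible.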

Chat with Llama 2. We just updated our 7B model; it's super fast. Customize Llama's personality by clicking the settings button. I can explain concepts, write poems, and code. Llama 2 is available for free for research and commercial use. We have collaborated with Kaggle to fully integrate Llama 2, offering pre-trained, chat, and Code Llama models in various sizes. Across a wide range of helpfulness and safety benchmarks, the Llama 2-Chat models perform better than most open models and achieve performance comparable to ChatGPT.
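A personality setting like the one in the chat demo is typically implemented as a system prompt. As far as we understand Meta's reference code, Llama 2-Chat expects each user turn wrapped in `[INST] ... [/INST]`, with the system prompt folded into the first user turn between `<<SYS>>` and `<</SYS>>` markers, and each completed exchange closed with `</s>`. The sketch below renders a conversation in that format; the function name `build_llama2_chat_prompt` is hypothetical, and the exact template should be checked against the model card or tokenizer you actually use.

```python
from typing import List, Optional, Tuple

# Markers used by the Llama 2-Chat prompt format (assumed from Meta's reference code).
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"
BOS, EOS = "<s>", "</s>"


def build_llama2_chat_prompt(system: str, turns: List[Tuple[str, Optional[str]]]) -> str:
    """Render (user, assistant) turns into one Llama 2-Chat prompt string.

    The final turn's assistant reply must be None: that is the reply the
    model is being asked to generate.
    """
    if not turns:
        raise ValueError("need at least one user turn")
    parts = []
    for i, (user, reply) in enumerate(turns):
        user = user.strip()
        if i == 0 and system:
            # The system prompt ("personality") is folded into the first user message.
            user = f"{B_SYS}{system.strip()}{E_SYS}{user}"
        if reply is None:
            if i != len(turns) - 1:
                raise ValueError("only the final turn may omit the reply")
            parts.append(f"{BOS}{B_INST} {user} {E_INST}")
        else:
            # A completed exchange is closed with the end-of-sequence marker.
            parts.append(f"{BOS}{B_INST} {user} {E_INST} {reply.strip()} {EOS}")
    return "".join(parts)


print(build_llama2_chat_prompt("You are a cheerful pirate.", [("Hi!", None)]))
```

If your tokenizer already prepends the BOS token when encoding, drop the literal `<s>` from the first turn so it is not duplicated.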

Fine-Tuning Llama 2 Models Using a Single GPU, QLoRA, and AI Notebooks | OVHcloud Blog

Install the Visual Studio 2019 Build Tools. To simplify things, we will use a one-click installer for Text-Generation-WebUI, the program used to load Llama 2 with a GUI. Llama 2 is a family of state-of-the-art open-access large language models released by Meta today, and we're excited to fully support the launch with comprehensive integration … Download: Llama 2 encompasses a range of generative text models, both pretrained and fine-tuned, with sizes from 7 billion to 70 billion parameters. Below you can find and download Llama 2. To download Llama 2 model artifacts from Kaggle, you must first request a … using the same email address as your Kaggle account. After doing so, you can request access to the models. All three model sizes are available for download on Hugging Face. Llama 2 models download: 7B, 13B, 70B. Llama 2 on Azure (16 August 2023). All three Llama 2 …

LLaMA-65B and 70B perform optimally when paired with a GPU that has a … Mem required: 22944.36 MB, 1280.00 MB per state. I was using q2, the smallest version. That RAM is going to be tight with 32 GB. Llama 2 is a collection of pretrained and fine-tuned generative text models ranging … This release includes model weights and starting code for pretrained and fine-tuned Llama language models (Llama Chat, Code Llama) ranging from 7B … Loading Llama 2 70B requires 140 GB of memory (70 billion parameters × 2 bytes). In a previous article, I showed how you can run a 180-billion-parameter …
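The 140 GB figure is simply parameter count times bytes per parameter. A minimal sketch of that arithmetic for the three Llama 2 sizes and a few common precisions follows. The dtype labels and the `weight_memory_gb` helper are illustrative, GB means 10^9 bytes (the convention behind the 140 GB figure), and the estimate covers weights only, with no KV cache, activations, or quantization metadata, so treat it as a lower bound.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gb(n_params: float, dtype: str) -> float:
    """Memory for the model weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


for size in (7e9, 13e9, 70e9):
    row = ", ".join(f"{d}: {weight_memory_gb(size, d):.1f} GB" for d in BYTES_PER_PARAM)
    print(f"{size / 1e9:.0f}B -> {row}")
```

By the same arithmetic, 4-bit weights for the 70B model need about 35 GB, which is why aggressive quantization is what brings the largest variant within reach of a single high-memory GPU or a workstation with a lot of RAM.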
